《machine-learning-mindmap》

4 Concepts

(Daniel Martinez)

Motivation

Prediction

When we are mainly interested in the predicted value of the output given the inputs, but not in the way each input affects the prediction. In a real estate example, prediction would answer the question: Is my house over- or undervalued? Non-linear models are very good at this sort of prediction, but not great for inference, because the models are much less interpretable.

Inference

When we are interested in the way each one of the inputs affects the prediction. In a real estate example, inference would answer the question: How much would my house cost if it had a view of the sea? Linear models are better suited for inference because the models themselves are easier to interpret than their non-linear counterparts.

Performance

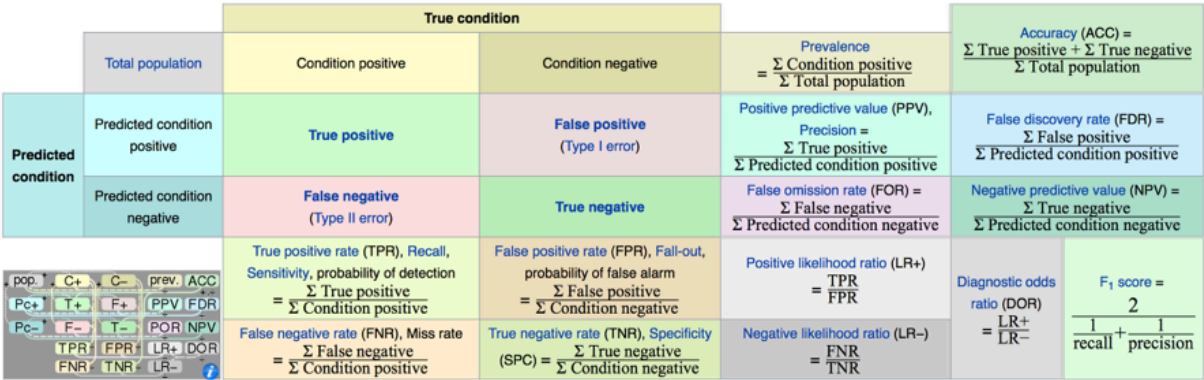

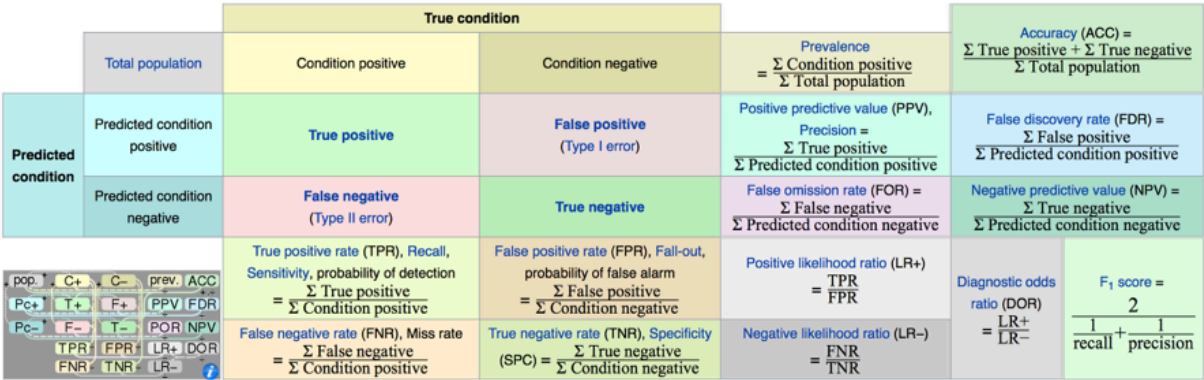

AnalysisConfusion Matrix

Accuracy

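Fraction of correct predictions. Not reliable when the data set is unbalanced (that is, when the number of samples in different classes varies greatly): a classifier that always predicts the majority class can reach a high accuracy while never detecting the minority class.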
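A minimal Python sketch of the imbalance problem, assuming a hypothetical data set with 95 negatives and 5 positives and a trivial classifier that always predicts the majority class. `accuracy` is an illustrative helper, not a library function.

```python
def accuracy(y_true, y_pred):
    # Fraction of predictions that match the true labels.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical imbalanced data: 95 negatives (0) and 5 positives (1).
y_true = [0] * 95 + [1] * 5
# Trivial "classifier" that always predicts the majority class.
y_pred = [0] * 100

print(accuracy(y_true, y_pred))  # 0.95, yet not a single positive is detected
```

The 95% accuracy hides the fact that recall on the positive class is zero, which is why the precision/recall-based F1 score is often preferred on skewed data.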

F1 score

Precision

Out of all the examples the classifier labeled as positive, what fraction were correct?

Recall

Out of all the positive examples there were, what fraction did the classifier pick up?

Harmonic mean of precision (p) and recall (r): F1 = 2 * p * r / (p + r)
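The definitions above can be sketched in Python by counting true positives (TP), false positives (FP) and false negatives (FN) from the confusion matrix: precision = TP / (TP + FP), recall = TP / (TP + FN). The helper name, the label `1` for the positive class and the convention of returning 0.0 when a denominator is zero are assumptions made for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    # Count the confusion-matrix cells needed for the positive class.
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Precision: of everything labeled positive, how much was truly positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of all truly positive examples, how many were picked up.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical labels: 4 positives, of which the classifier finds 2, plus 1 false alarm.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(precision_recall_f1(y_true, y_pred))
```

Here TP = 2, FP = 1 and FN = 2, so precision is 2/3, recall is 1/2 and F1 is 4/7. The harmonic mean stays closer to the smaller of the two values, so a classifier cannot get a high F1 by maximizing only one of them.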

ROC Curve (Receiver Operating Characteristic)

True Positive Rate (Recall / Sensitivity) vs. False Positive Rate (1 - Specificity), plotted as the decision threshold of the classifier varies.
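A minimal sketch of how the points of an ROC curve can be obtained, assuming a binary classifier that outputs a score per sample, labels `1` (positive) and `0` (negative) with both classes present, and the rule "predict positive when score >= threshold". The function name and the example scores are hypothetical.

```python
def roc_curve_points(y_true, scores):
    # Sweep the threshold from the highest score down and record (FPR, TPR).
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 0)
        # x = false positive rate (1 - specificity), y = true positive rate (recall).
        points.append((fp / neg, tp / pos))
    return points

y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
for fpr, tpr in roc_curve_points(y_true, scores):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold can only move the curve up or to the right; it ends at (1, 1), where every sample is predicted positive.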

Bias-Variance Tradeoff

Bias refers to the amount of error that is introduced by approximating a real-life problem, which may be extremely complicated, by a simple model. If bias is high (a typical symptom is that the algorithm performs poorly even on your training data), try adding more features or using a more flexible model.

Variance is the amount by which our model’s prediction would change if we used a different training data set. If variance is high, remove features or obtain more data.
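The tradeoff can be estimated by simulation: refit a model on many independent training sets and look at its predictions at one input. This is a sketch under stated assumptions: the "real-life" function `true_f(x) = x * x`, the noise level, the test point 0.9 and both models (a constant mean predictor and a 1-nearest-neighbor predictor) are hypothetical choices for illustration, not part of the original text.

```python
import random
import statistics

def true_f(x):
    # Hypothetical "real-life" relationship the models try to approximate.
    return x * x

def sample_training_set(rng, n=20, noise_sd=0.3):
    # Draw n inputs uniformly from [0, 1) and add Gaussian noise to the targets.
    xs = [rng.random() for _ in range(n)]
    ys = [true_f(x) + rng.gauss(0.0, noise_sd) for x in xs]
    return xs, ys

def constant_model(xs, ys, x0):
    # Very simple model: always predict the mean training target (high bias).
    return statistics.fmean(ys)

def nearest_neighbor_model(xs, ys, x0):
    # Very flexible model: copy the target of the closest training input (high variance).
    i = min(range(len(xs)), key=lambda j: abs(xs[j] - x0))
    return ys[i]

def bias_variance_at(model, x0=0.9, trials=2000, seed=0):
    # Refit the model on many independent training sets; collect its predictions at x0.
    rng = random.Random(seed)
    preds = [model(*sample_training_set(rng), x0) for _ in range(trials)]
    bias_sq = (statistics.fmean(preds) - true_f(x0)) ** 2
    variance = statistics.pvariance(preds)
    return bias_sq, variance

for name, model in [("constant", constant_model), ("1-NN", nearest_neighbor_model)]:
    b, v = bias_variance_at(model)
    print(f"{name}: bias^2 = {b:.4f}, variance = {v:.4f}")
```

The constant model barely changes between training sets but is systematically wrong at x = 0.9, while the nearest-neighbor model is nearly unbiased but inherits the noise of whichever point happens to be closest.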

Goodness of Fit = R^2

R^2 = 1.0 - SS_res / SS_tot, where SS_res = Σ (y_i - ŷ_i)^2 is the sum of squared errors and SS_tot = Σ (y_i - ȳ)^2 is the total sum of squares of y.
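A direct Python transcription of the formula above, as a minimal sketch; `r_squared` is an illustrative name, and the example values are made up.

```python
def r_squared(y_true, y_pred):
    # R^2 = 1 - SS_res / SS_tot
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))  # sum of squared errors
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)             # total sum of squares of y
    return 1.0 - ss_res / ss_tot

y_true = [1.0, 2.0, 3.0, 4.0]
print(r_squared(y_true, [1.0, 2.0, 3.0, 5.0]))  # SS_res = 1, SS_tot = 5
print(r_squared(y_true, [2.5, 2.5, 2.5, 2.5]))  # always predicting the mean
```

A perfect fit gives R^2 = 1, and a model that always predicts the mean of y gives R^2 = 0; a model worse than that gets a negative value.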

Mean Squared Error (MSE)

The mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors or deviations, that is, of the differences between the estimator and what is estimated: MSE = (1/n) Σ (y_i - ŷ_i)^2.
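A minimal sketch of the MSE computation for a set of predictions; the function name and the sample values are illustrative.

```python
def mean_squared_error(y_true, y_pred):
    # Average of the squared differences between targets and predictions.
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(mean_squared_error(y_true, y_pred))  # (0.25 + 0.25 + 0 + 1) / 4 = 0.375
```

Because the errors are squared, the single error of 1.0 contributes twice as much as the two errors of 0.5 combined.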

Error Rate

The proportion of mistakes made when we apply our estimated model function to the training observations in a classification setting, that is, the fraction of observations whose predicted class differs from the true class.
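A minimal sketch of the training error rate, assuming the true and predicted class labels are available as two equal-length lists; the labels below are hypothetical.

```python
def error_rate(y_true, y_pred):
    # Fraction of observations whose predicted class differs from the true class.
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = ["cat", "dog", "dog", "cat", "bird"]
y_pred = ["cat", "dog", "cat", "cat", "dog"]
print(error_rate(y_true, y_pred))  # 2 mistakes out of 5 -> 0.4
```

The error rate is the complement of accuracy (error rate = 1 - accuracy), so it shares accuracy's weakness on unbalanced data.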

Tuning

Cross-validation

One round of cross-validation involves partitioning a sample of data into complementary subsets, performing the analysis on one subset (called the training set), and validating the analysis on the other subset (called the validation set or testing set). To reduce variability, multiple rounds of cross-validation are performed using different partitions, and the validation results are averaged over the rounds.
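A minimal sketch of the procedure above in its k-fold form: each round trains on k - 1 folds, validates on the remaining fold, and the validation scores are averaged. The least-squares line model, the MSE score and the synthetic data are hypothetical stand-ins; any `fit` / `score` pair with the same shape would work.

```python
import random
import statistics

def k_fold_indices(n, k, seed=0):
    # Shuffle indices 0..n-1 once, then deal them into k nearly equal folds.
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(xs, ys, fit, score, k=5, seed=0):
    # Each round trains on k-1 folds and validates on the remaining one.
    results = []
    for fold in k_fold_indices(len(xs), k, seed):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        results.append(score(model, [xs[i] for i in fold], [ys[i] for i in fold]))
    # Average the validation results over the rounds.
    return statistics.fmean(results), results

def fit_line(xs, ys):
    # Hypothetical model: least-squares line y = a + b * x.
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def mse_score(model, xs, ys):
    # Validation metric: mean squared error of the line on held-out points.
    a, b = model
    return statistics.fmean((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))

xs = [float(x) for x in range(20)]
ys = [2.0 * x + 1.0 + (0.5 if int(x) % 2 else -0.5) for x in xs]
mean_mse, per_fold = cross_validate(xs, ys, fit_line, mse_score, k=5)
print(mean_mse, per_fold)
```

Reporting the per-fold scores alongside their mean gives a rough sense of how much the estimate depends on the particular partition.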

Methods

Leave-p-out cross-validation

Leave-one-out cross-validation

k-fold cross-validation

Holdout method

Repeated random sub-sampling validation
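The methods listed above differ only in how they generate (training, validation) index splits. The following is a sketch of split generators for four of them (k-fold was sketched separately); the function names, the 30% validation fraction and the seeding scheme are assumptions for illustration.

```python
import itertools
import random

def leave_p_out(n, p):
    # Every subset of p indices is used exactly once as the validation set.
    for val in itertools.combinations(range(n), p):
        held = set(val)
        yield [i for i in range(n) if i not in held], list(val)

def leave_one_out(n):
    # Special case p = 1: n splits, each holding out a single sample.
    return leave_p_out(n, 1)

def holdout(n, test_fraction=0.3, seed=0):
    # A single random split into training and validation indices.
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_val = int(round(n * test_fraction))
    return idx[n_val:], idx[:n_val]

def repeated_random_subsampling(n, test_fraction=0.3, rounds=10, seed=0):
    # Repeat the holdout split with a fresh random partition each round.
    rng = random.Random(seed)
    for _ in range(rounds):
        yield holdout(n, test_fraction, seed=rng.randrange(2**32))

print(sum(1 for _ in leave_p_out(5, 2)))  # C(5, 2) = 10 splits
print(holdout(10))
```

Leave-p-out grows combinatorially with n and p, which is why leave-one-out, k-fold, or repeated random sub-sampling are used in practice for larger data sets.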